
This article introduces the paper written by Hugo Koubbi, Matthieu Boussard and Louis Hernandez, from the Craft AI R&D team.

LoRA appears to be the most widely used fine-tuning method for LLMs. It reduces the number of trainable parameters by applying a low-rank matrix factorization to the weight updates of the attention matrices. In “The Impact of LoRA on the Emergence of Clusters in Transformers”, written with Matthieu Boussard and Louis Hernandez, we study the impact of LoRA on token dynamics.
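To make the low-rank idea concrete, here is a minimal NumPy sketch. It is an illustration only, not the setup used in the paper: the dimensions `d` and `r`, the scaling `alpha`, the helper `lora_weight` and the random matrices are all hypothetical stand-ins.

```python
import numpy as np

rng = np.random.default_rng(0)

d, r = 64, 4  # hypothetical embedding dimension and LoRA rank

# Frozen pretrained weight (a random stand-in for an attention matrix).
W = rng.standard_normal((d, d))

# Trainable low-rank factors. B starts at zero, so the update is initially zero.
A = 0.01 * rng.standard_normal((r, d))
B = np.zeros((d, r))


def lora_weight(W, A, B, alpha=1.0):
    """Return the effective weight W + alpha * B @ A (hypothetical helper)."""
    return W + alpha * (B @ A)


full_params = W.size           # parameters updated by full fine-tuning
lora_params = A.size + B.size  # parameters updated by LoRA

# Pretend that training has moved B away from zero (random stand-in values).
B_trained = 0.1 * rng.standard_normal((d, r))
delta = B_trained @ A          # the learned update has rank at most r
update_rank = np.linalg.matrix_rank(delta)

print(f"full: {full_params} params, LoRA: {lora_params} params, "
      f"rank of update: {update_rank}")
```

With these sizes, LoRA trains `2 * d * r` parameters instead of `d * d`, and the update `B @ A` can never have rank above `r`.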

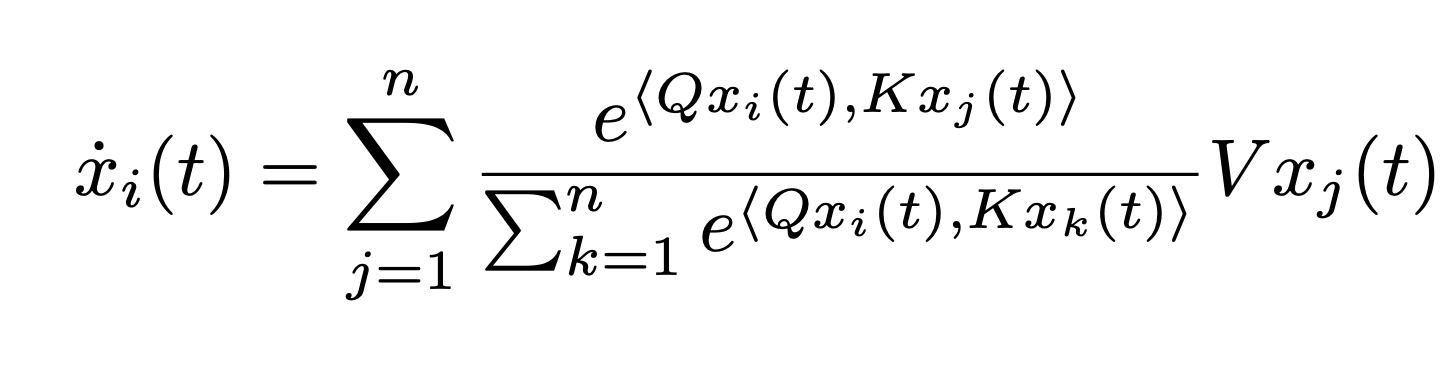

Building on the framework proposed by Sander et al., we adopt a simplified Transformer architecture. In line with the concept of neural ODEs, we treat the layer index as time and the tokens as an interacting particle system.
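As a rough guide, self-attention dynamics of this kind are commonly written as follows in the literature on clustering in Transformers. The notation is an assumption made here for illustration ($ n $ tokens $ x_i(t) \in \mathbb{R}^d $, attention matrices $ Q $, $ K $, $ V $), and the exact model in the paper may include additional normalization or rescaling:

$$
\dot{x}_i(t) = \sum_{j=1}^{n} P_{ij}(t)\, V x_j(t),
\qquad
P_{ij}(t) = \frac{e^{\langle Q x_i(t),\, K x_j(t) \rangle}}{\sum_{k=1}^{n} e^{\langle Q x_i(t),\, K x_k(t) \rangle}}.
$$

Each row of $ P(t) $ sums to one, so every token moves towards an attention-weighted average of the tokens, transformed by $ V $.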

In “The emergence of clusters in self-attention dynamics” and “A mathematical perspective on Transformers”, Geshkovski et al. showed that these dynamics asymptotically lead to the formation of token clusters. In this study, we investigate how LoRA fine-tuning affects cluster formation in Transformers.
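The clustering behavior can be observed in a small toy simulation. The sketch below assumes a simplified variant of the dynamics in which tokens live on the unit sphere and $ Q = K = V = I $. The step size, the number of tokens, the initialization in a single hemisphere and the helper names are all choices made here for illustration, not the experimental setup of the paper.

```python
import numpy as np


def attention_step(X, beta=1.0, dt=0.05):
    """One explicit Euler step of the toy dynamics, followed by renormalization.

    X has shape (n, d); each row is a token on the unit sphere.
    """
    logits = beta * (X @ X.T)                    # <x_i, x_j> with Q = K = I
    logits -= logits.max(axis=1, keepdims=True)  # numerically stable softmax
    P = np.exp(logits)
    P /= P.sum(axis=1, keepdims=True)            # row-stochastic attention matrix
    drift = P @ X                                # attention-weighted average, V = I
    # Project the drift onto the tangent space of the sphere at each token.
    drift -= np.sum(drift * X, axis=1, keepdims=True) * X
    X_new = X + dt * drift
    return X_new / np.linalg.norm(X_new, axis=1, keepdims=True)


def diameter(X):
    """Largest pairwise Euclidean distance between tokens."""
    diffs = X[:, None, :] - X[None, :, :]
    return np.linalg.norm(diffs, axis=-1).max()


rng = np.random.default_rng(0)
n, d = 16, 3
# Start in the positive orthant so that all tokens lie in one hemisphere.
X0 = np.abs(rng.standard_normal((n, d)))
X0 /= np.linalg.norm(X0, axis=1, keepdims=True)

X = X0.copy()
diameters = [diameter(X)]
for _ in range(600):  # 600 "layers" with dt = 0.05, i.e. time t = 30
    X = attention_step(X)
    diameters.append(diameter(X))

print(f"initial diameter: {diameters[0]:.3f}, final diameter: {diameters[-1]:.2e}")
```

In this setting the diameter of the token cloud shrinks as the tokens collapse into a single cluster. With other choices of $ Q $, $ K $ and $ V $, several clusters can form.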

We establish short-term stability results with respect to the attention matrix parameters. Our findings show that even if the long-term dynamics of tokens with the same initial conditions diverge, the two trajectories remain close over short time scales.

If only the attention matrix V is changed, the tokens of the modified trajectory initially follow a pattern similar to the original dynamics before diverging towards a new clustering (original dynamics on the left, LoRA dynamics on the right).
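The comparison between the two trajectories can be sketched numerically. The code below is a toy experiment under stated assumptions, not the paper's setup: it uses the unnormalized dynamics $ \dot{x}_i = \sum_j P_{ij} V x_j $ with $ Q = K = I $, takes $ V = I $ as the original matrix, and adds a random rank-one update `B @ A` as a stand-in for a LoRA update. All names and sizes are hypothetical.

```python
import numpy as np


def softmax_rows(S):
    """Row-wise softmax, shifted for numerical stability."""
    S = S - S.max(axis=1, keepdims=True)
    E = np.exp(S)
    return E / E.sum(axis=1, keepdims=True)


def simulate(X0, V, steps, dt):
    """Explicit Euler integration of dx_i/dt = sum_j P_ij V x_j with Q = K = I."""
    X = X0.copy()
    traj = [X.copy()]
    for _ in range(steps):
        P = softmax_rows(X @ X.T)
        X = X + dt * (P @ X @ V.T)  # row i is sum_j P_ij (V x_j)
        traj.append(X.copy())
    return np.stack(traj)           # shape (steps + 1, n, d)


rng = np.random.default_rng(1)
n, d, r = 8, 2, 1
dt, steps = 0.01, 300

X0 = rng.standard_normal((n, d))
V = np.eye(d)                           # original value matrix (assumption)
B = 0.3 * rng.standard_normal((d, r))
A = rng.standard_normal((r, d))
V_lora = V + B @ A                      # rank-one perturbation of V

traj_orig = simulate(X0, V, steps, dt)
traj_lora = simulate(X0, V_lora, steps, dt)

# Largest token-wise distance between the two trajectories at each time step.
gap = np.linalg.norm(traj_orig - traj_lora, axis=-1).max(axis=1)

for k in (0, 1, 10, 100, steps):
    print(f"t = {k * dt:.2f}: gap = {gap[k]:.4f}")
```

Both runs start from the same tokens, so the gap is zero at first. After one step it is at most `dt` times the spectral norm of `B @ A` times the largest initial token norm, which is a crude one-step version of the short-term stability described above. How quickly the gap grows afterwards depends on the random draw and on the size of the update.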

[Animation: original dynamics (left) and LoRA dynamics (right)]

We also establish a theoretical upper bound on the formation time of the second cluster, and we investigate numerically how tight this bound is.

Motivated by empirical and theoretical results on the bias of $ QK^{T} $ attention matrices towards low-rank matrices, we study the clustering structures that arise in this low-rank case and highlight new ones.

We also show, in a simplified framework, how LoRA can be used to create new clustering structures (LoRA dynamics on the left, original dynamics on the right).

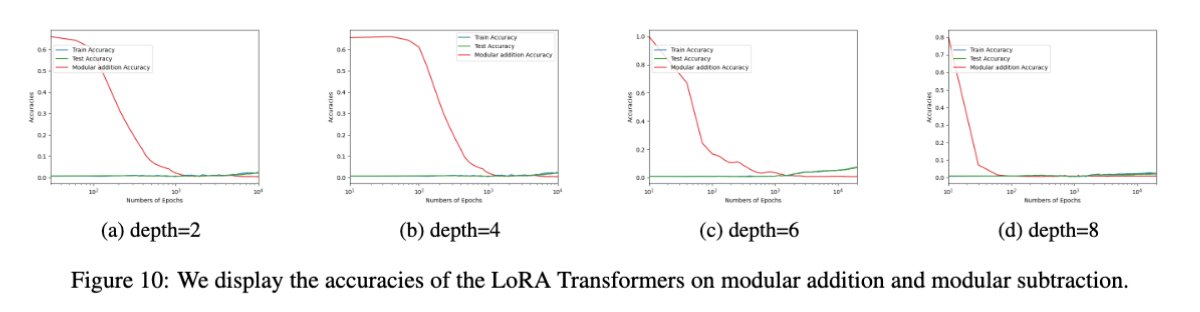

We also trained the architecture considered in the paper on modular addition and then fine-tuned it on modular subtraction. As an illustration of our results, the deeper the model, the faster the accuracy decreases during fine-tuning.

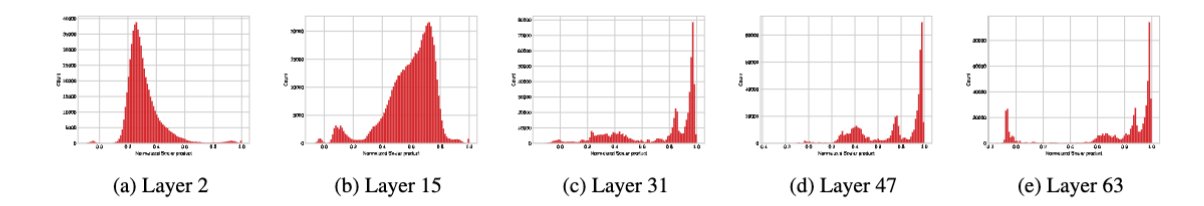

Although our assumptions are rather simplistic, the clustering phenomenon also seems to appear in LLMs such as Llama 2 7B.