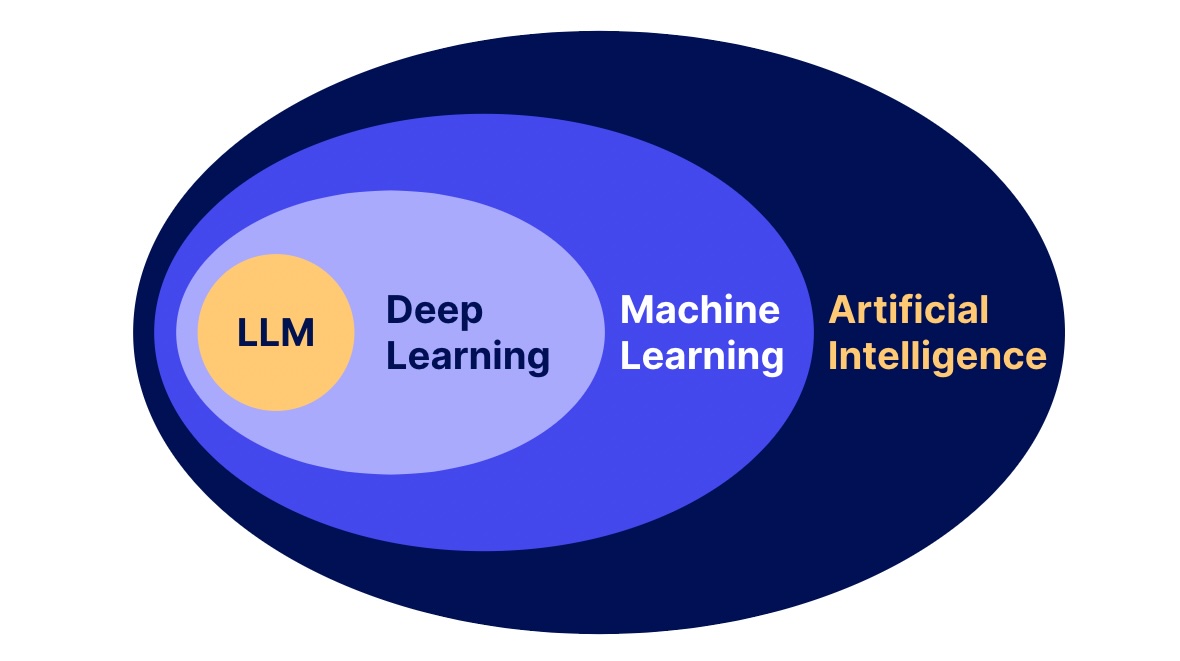

From RAGs to riches: How LLMs can be more reliable for knowledge-intensive tasks

In this article, we cover how retrieval-augmented generation (RAG) makes LLMs more reliable, efficient, trustworthy, and flexible. We do so by walking through each component of the architecture, from the embedding model to the LLM.